ESPnet, which has more than 7,500 commits on GitHub, was originally focused on automatic speech recognition (ASR) and text-to-speech (TTS) code. It has recently been updated to include code for building machine translation systems, and now professes to be an “all-in-one toolkit that should make it easier for both ASR and MT researchers to get started in ST research.”

New Speech-to-Speech Translation Toolkit on GitHub

(Esther Bond for Slator)

As speech-to-speech translation (ST) has become a prominent area of interest in recent machine translation (MT) research, a group of researcher-developers has made its end-to-end speech processing toolkit publicly available to other developers.

In a paper published on the preprint server arXiv on April 21, 2020, Hirofumi Inaguma, Shun Kiyono, Kevin Duh, Shigeki Karita, Nelson Enrique Yalta Soplin, Tomoki Hayashi, and Shinji Watanabe presented their open-source toolkit, called ESPnet-ST.

The developer-researchers hail from Kyoto University, Johns Hopkins University, Waseda University and Nagoya University, as well as several Japan-based organizations: the research lab NTT Communication Science Laboratories; Human Dataware Lab. Co., Ltd., a software development startup focused on machine learning software; and RIKEN AIP, an AI R&D center that aims to “achieve scientific breakthrough and to contribute to the welfare of society and humanity through developing innovative technologies.”

Kyoto University’s Hirofumi Inaguma told Slator that the team’s main motivation for developing the toolkit is to break down language-related communication barriers. By making the toolkit open source, he said, he hopes to help other researchers “move forward to the next breakthrough.”

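ESPnet-ST was designed for “the quick development of speech-to-speech translation systems in a single framework.” Rather than relying only on a traditional “cascaded” pipeline, in which a speech recognition system first transcribes the audio and a separate MT system then translates the transcript, ESPnet-ST supports end-to-end models, which map speech in a source language directly to its translation in the target language.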

Microsoft-owned GitHub is a popular code-hosting platform that lets developers share code and collaborate on software. Contributors can publish software libraries (or “toolkits”), such as ESPnet, containing code that other developers can reuse in their own projects. Code sharing speeds up development because, rather than writing code from scratch (known as “rolling your own”), developers can reuse existing code for common development tasks, such as login (to borrow a generic example) or feature extraction (to use an example from speech processing).

According to the paper, ESPnet-ST also curates code into “recipes” for common speech-to-speech translation tasks such as data pre-processing, training and decoding. The researchers claim that their results are “reproducible” and can “match or even outperform the current state-of-the-art performances.” Their pre-trained models are available to download from GitHub.

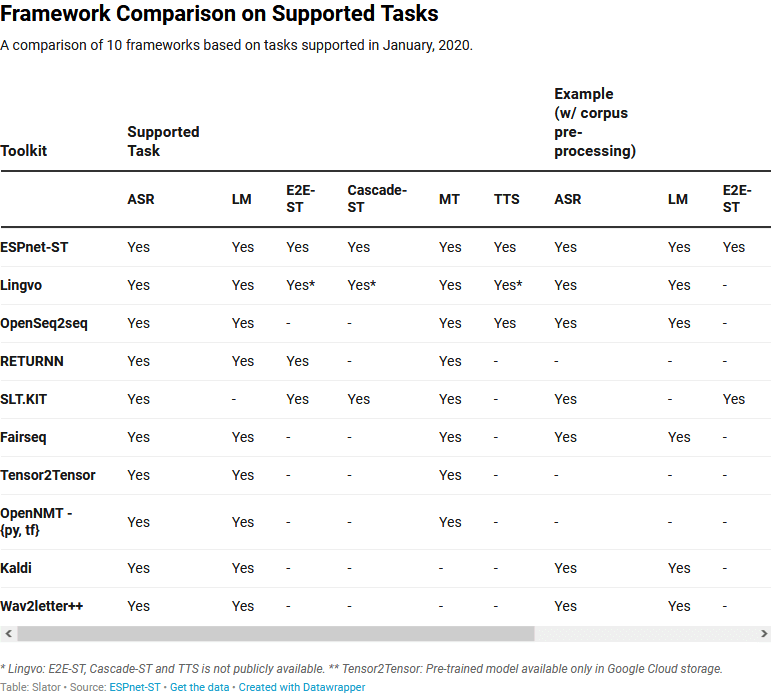

The paper compared ESPnet-ST with nine other toolkits, including Facebook’s Fairseq, NVIDIA’s OpenSeq2Seq, and OpenNMT by SYSTRAN and Ubiqus.

The researchers believe that ESPnet-ST is the first toolkit “to include ASR, MT, TTS, and ST recipes and models in the same codebase.” It is also “very easy to customize training data and models,” they said.

Looking forward to future work, the ESPnet-ST developers said that they plan to “support more corpora and implement novel techniques to bridge the gap between end-to-end and cascaded approaches.” Inaguma also said that his current research focuses on multilingual and streaming models, which he called “the next essential technique.”